Cosmos DB is Microsoft’s latest NoSQL database offering low latency, high scalability and geo-distribution to any Azure region. Read Microsoft documentation about Cosmos DB here or check out my blog about Introduction to Azure Cosmos DB. In its infancy, Cosmos DB was known as Document DB. Renaming was inevitable as Document DB evolved beyond just a document store.

Following is a simple note on how to export data from Cosmos DB to SQL Server. You may want a dump of data from a Cosmos DB collection to SQL Server for analysis, data integrity checks, troubleshooting a production issue or to derive insights. If you want to set up a real-time data pipeline from Cosmos DB to SQL Server, check out this post: – https://sqlroadie.wordpress.com/2019/07/21/azure-cosmos-db-real-time-data-movement-using-change-feed-and-azure-functions/

There are a few methods to export data from Cosmos DB. The quickest one is to use Document DB / Cosmos DB Migration Tool. This is a tool provided by Microsoft to migrate data TO/FROM various sources such as MongoDB, JSON, csv and SQL Server to Cosmos DB.

1. Use Azure Cosmos DB Migration tool to export data to json files:

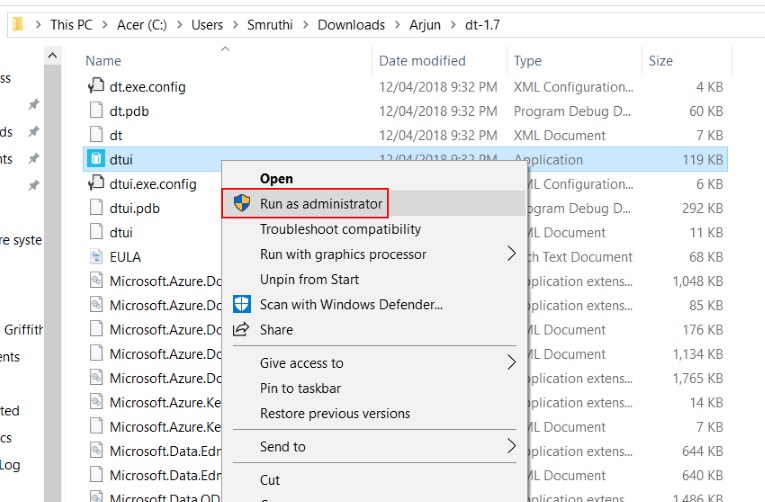

- Install the tool and launch as Administrator (use an Azure VM for best performance). Please be mindful of spike in RU costs when exporting data from your collection. To avoid throttling, scale your collection up as required just before you do the export or export in off-peak hours, if any. Leave a comment if you need any pointer on this.

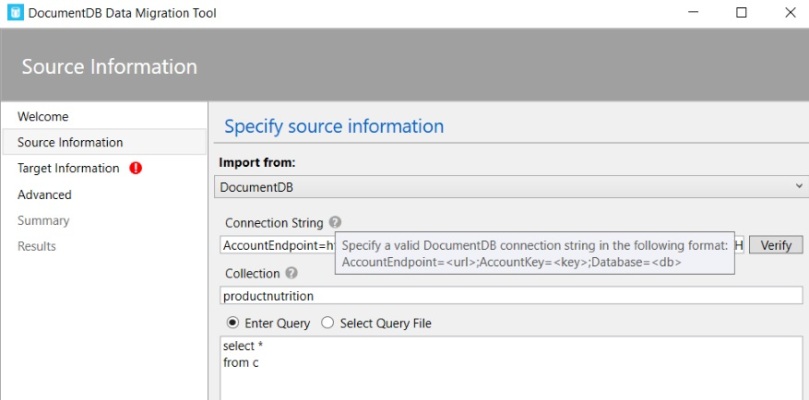

- Choose source – DocumentDB (CosmosDB aka DocumentDB)

- Specify connection string. You can find endpoint and key from Keys section of your CosmosDB account in Azure portal. Please note that CosmosDB is case-sensitive.

- A peek into Advanced options

- Include internal fields – for each document, Cosmos DB maintains a set of auto-generated internal/system fields such as _ts and _self. Their names start with an underscore, making it easy to differentiate them from user fields. This option lets you include internal fields in the output. This is handy, especially the _ts field, which indicates when the document was last updated.

- Number of retries on failure & Retry interval – Set a reasonable number of retries. In this case, I have used the value 1.

- Connection Mode – you want to use Gateway to get best performance and to bypass firewall rules.

- Specify target information. In this case, we want to export to a json document. We could either output to a local file or a blob.

- Error Logging. Set these options to enable error logging.

Hit Import and if there are no errors, you will soon have a new json file with all the data from your collection. If you want only a subset of data, all you need to do is modify your source query.

2. Import json files to SQL Server:

SQL Server 2016 introduced a JSON parse function called OPENJSON. If none of your user databases are upgraded to 2016 yet, but you have a 2016 engine, context-switch to a system database to use OPENJSON.

DECLARE @productNutrition varchar(max); --Read from the json file using openrowset SELECT @productNutrition = BulkColumn FROM OPENROWSET(BULK'C:\Users\Smruthi\Downloads\Arjun\productnutrition_20180427.json', SINGLE_BLOB) JSON; --Pass the variable containing json as parameter to OPENJSON function SELECT * FROM OPENJSON (@productNutrition) WITH ( ProductID varchar(20) '$.id', ProductDescription varchar(100) '$.description', ProductGroup varchar(200) '$.foodGroup', ServingAmount float '$.servings[1].amount', ServingUnit varchar(10) '$.servings[1].description', nutrients nvarchar(max) as json --note that json is case sensitive )

Give it a go yourself. If you have any question, leave a comment and I will be happy to assist.

If you are exploring Cosmos DB, consider reading my blog about Azure Cosmos DB – Partition and Throughput to get an overview of partitioning and scaling concepts.

[…] out my post about Cosmos DB migration tool if you are migrating data to or from Cosmos […]

Thanks for your post, can you help me with the next question?

In this part I have one error

–Read from the json file using openrowset

SELECT @productNutrition = BulkColumn

FROM OPENROWSET(BULK’C:\Users\Mig\file.json’, SINGLE_BLOB) JSON;

This is the error:

Cannot bulk load because the file “C:\Users\Mig\file.json” could not be opened. Operating system error code (null).

Hi Miguel, sounds like SQL service does not have access to the folder where the file is stored. I’d say try copying the file to a folder that SQL has access to. OS error code is not helpful in this case 🙂

Hi Arjun, Thank for the article. Very useful. What would your recommendation be if there is a requirement for a scheduled export from DocumentDB to SQL Server? For example get all new documents created/ updated yesterday/ last week etc. The downside of the Data Migration Tool is that it requires a user to trigger it. Would you used something like ADF?

Hi Cristina, I’m glad you found the article helpful. For a scheduled export from Cosmos to SQL, using Cosmos DB Change Feed with Azure Functions is a good idea. This is cost effective from a throughput perspective too. I’m writing an article on this topic, will try my best to publish this week.

Have you published the artice for scheduled export from Cosmos to SQl using Cosmos DB change feed with Azure function. Let me know the url for accessing that article

Hi Pradeep, yes, here it is.

https://sqlroadie.wordpress.com/2019/07/21/azure-cosmos-db-real-time-data-movement-using-change-feed-and-azure-functions/

Hi Arjun, Can you write a article how to do incremental load from Azure sql db to Azure Cosmos db.

I’m waiting for it.

Hi Prem, please refer to the following article from Microsoft:

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-cosmos-db

Let me know in the comments if it doesn’t suit your needs, I’ll be happy to write an article covering the missing aspects.

[…] Export data from Cosmos DB to SQL Server Arjun describes how to export documents to JSON and import all of them to SQL Server. […]

Hi Arjun, could you please write an article with detailed steps on data extraction from Cosmos DB using Talend ?

Hi Mayur, thanks for your interest. My bandwidth at the moment wouldn’t allow me to invest time in that. Do you use Talend Open Studio? I don’t see it being a big challenge as long as you construct your Cosmos DB query appropriately.

Hi,

I have more than 100+ colums with n number of rows and it stored Kafdrop to Azure Cosmos DB. Now I want to extract only limited columns from Cosmos DB toSQL server, so it’ll make easy to create dashboards using power bi.

Kindly help me out for this.

Hi Gopalakrishnan, you could refer to my article on Change Feed which achieves exactly this.

I am also writing a new article on doing this at scale, using Cosmos DB’s Synapse Link and achieving the same result using Synapse Analytics and Power BI.

Hi Gopalakrishnan, here is a link to the article on Synapse Link for Cosmos DB as promised.

Hi Arjun, is it possible to export from MongoDB (or CosmosDB) in such a way that a field that is an array of objects be converted to a child table? (In your example above, the table productNutrition would not have a field nutrients, but rather a second table, nutrients, would be created.) Thanks!

Hi Victor,

It is possible to create a second table, with a foreign key reference to the first table. You will have to run two separate exports if you want to achieve this.

Regards,

Arjun